Revolutionizing Diffusion Techniques: A 256x Efficiency Boost

Introduction to Diffusion Models

Denoising diffusion probabilistic models (DDPMs) with classifier-free guidance, such as DALL·E 2, GLIDE, and Imagen, have set benchmarks in high-resolution image generation. Their main drawback is inference cost: each sampling step evaluates both a conditional model and an unconditional model, and sampling takes many such steps. This makes the models computationally intensive and impractical for many real-world applications.
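To make the cost concrete, here is a minimal sketch of how classifier-free guidance combines the two predictions at every step. It assumes the commonly used form (1 + w) * conditional - w * unconditional, where w is the guidance strength. The function names and the toy numbers are illustrative and do not come from the paper.

```python
def guided_prediction(cond_pred, uncond_pred, w):
    """Combine two noise predictions with guidance strength w.

    Assumes the common classifier-free guidance form
    (1 + w) * conditional - w * unconditional.
    """
    return [(1.0 + w) * c - w * u for c, u in zip(cond_pred, uncond_pred)]


def network_evaluations(num_steps, models_per_step=2):
    """Count network calls for a guided sampler that evaluates a
    conditional and an unconditional model at every step."""
    return num_steps * models_per_step


# Toy predictions standing in for real network outputs (hypothetical values).
cond = [0.5, -1.0, 2.0]
uncond = [0.25, -0.5, 1.0]

print(guided_prediction(cond, uncond, w=0.0))  # w = 0 recovers the conditional prediction
print(guided_prediction(cond, uncond, w=2.0))  # larger w pushes away from the unconditional one
print(network_evaluations(256))                # two network calls per sampling step
```

Because both networks run at every step, the total cost grows with twice the step count, which is the overhead the distillation method targets.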

Innovative Distillation Approach

In the recent paper "On Distillation of Guided Diffusion Models," researchers from Google Brain and Stanford University introduce a method for improving the sampling efficiency of classifier-free guided diffusion models. The distilled models perform comparably to the originals while cutting the number of sampling steps by a factor of up to 256.

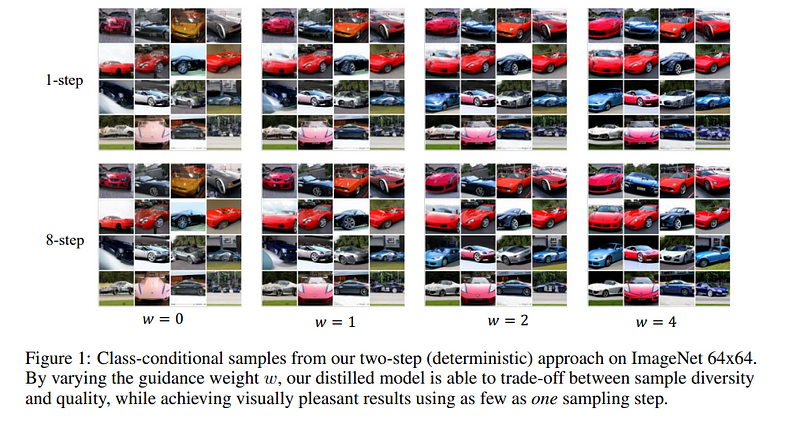

The distillation process has two stages. In the first, the researchers start from a trained guided teacher model and train a single student model to match the combined output of the teacher's two diffusion models (conditional and unconditional). In the second, this student is progressively distilled into a model that needs fewer sampling steps. The final distilled model handles a range of guidance strengths, so users can still trade sample quality against sample diversity efficiently.
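The arithmetic behind the second stage can be sketched as follows, assuming each round of progressive distillation halves the number of sampling steps, as the technique is usually described. The 1024-step teacher and 4-step student are hypothetical figures chosen only so that the ratio matches the 256x reduction quoted above. The article itself does not give step counts.

```python
def halving_schedule(teacher_steps, student_steps):
    """Return the step count after each distillation round, assuming every
    round halves the number of sampling steps (an assumption of this sketch)."""
    if student_steps < 1 or teacher_steps < student_steps:
        raise ValueError("need 1 <= student_steps <= teacher_steps")
    schedule = [teacher_steps]
    while schedule[-1] > student_steps:
        if schedule[-1] % 2 != 0:
            raise ValueError("step count is not divisible by 2")
        schedule.append(schedule[-1] // 2)
    if schedule[-1] != student_steps:
        raise ValueError("student_steps is not reachable by repeated halving")
    return schedule


# Hypothetical step counts, chosen to illustrate a 256x reduction.
schedule = halving_schedule(teacher_steps=1024, student_steps=4)
rounds = len(schedule) - 1
reduction = schedule[0] // schedule[-1]

print(schedule)
print(rounds, "halving rounds,", reduction, "x fewer steps")
```

Under these assumptions, a 256x reduction corresponds to eight halving rounds, since 2 to the power 8 is 256.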

Sampling Methodology

The proposed method supports both a deterministic sampler and a new stochastic sampler. The stochastic sampler first takes one deterministic sampling step at twice the original step length, then takes one stochastic step backward, perturbing the sample with noise, at the original step length. The approach is inspired by Karras et al.'s earlier paper "Elucidating the Design Space of Diffusion-Based Generative Models."
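The time schedule this implies can be sketched without any model at all. The sketch below assumes time runs from 1 (pure noise) down to 0 (clean sample), and that the final move is a plain deterministic step when only one step length remains. Both conventions, along with the function name, are assumptions of this illustration rather than details taken from the paper.

```python
def stochastic_sampler_moves(num_steps):
    """List the (kind, t_from, t_to) moves of the two-part sampling scheme.

    Each iteration takes a deterministic step of length 2h toward t = 0,
    then a noise-adding step of length h back toward t = 1, for a net
    progress of h per iteration.
    """
    h = 1.0 / num_steps  # original step length
    i = num_steps        # current position on the time grid, t = i * h
    moves = []
    while i > 0:
        if i >= 2:
            moves.append(("deterministic", i * h, (i - 2) * h))
            moves.append(("stochastic", (i - 2) * h, (i - 1) * h))
            i -= 1
        else:
            # Assumed boundary handling: finish with one ordinary step.
            moves.append(("deterministic", i * h, 0.0))
            i = 0
    return moves


moves = stochastic_sampler_moves(4)
for kind, t_from, t_to in moves:
    print(f"{kind:13s} {t_from:.2f} -> {t_to:.2f}")
```

Each pair of moves advances the sample by one original step length, so the sampler revisits every noise level with fresh noise while still finishing at t = 0.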

Empirical Results

In their empirical study, the researchers applied the technique to DDPMs with classifier-free guidance and ran image generation experiments on the ImageNet 64x64 and CIFAR-10 datasets. The results show that the method can produce "visually decent" samples with as few as one sampling step and achieve FID/IS (Fréchet Inception Distance/Inception Score) results comparable to those of the baseline models, all while being up to 256 times faster.

This research illustrates the significant potential of the proposed approach in mitigating the high computational demands that have hindered the practical use of denoising diffusion probabilistic models. For further reading, the paper "On Distillation of Guided Diffusion Models" is available on arXiv.
