Understanding the Signs of AI-Generated Articles


Chapter 1: The Rise of AI in Content Creation

As AI-powered tools like ChatGPT become more widely used, it is increasingly common to encounter articles that appear original but may have been generated by artificial intelligence. This guide outlines key indicators to help you judge whether the article you're reading was written by AI.

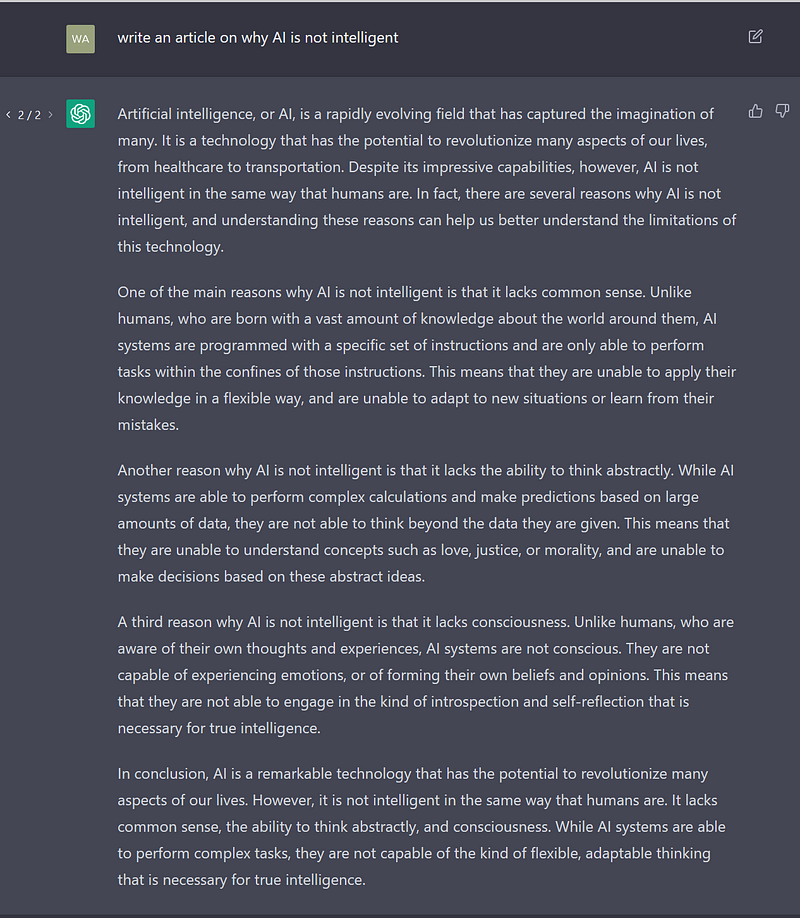

Recently, I experimented with ChatGPT to see whether it could produce writing that might easily be mistaken for human work. For instance, I asked it to "compose an article on the topic of why AI lacks true intelligence," with some interesting results:

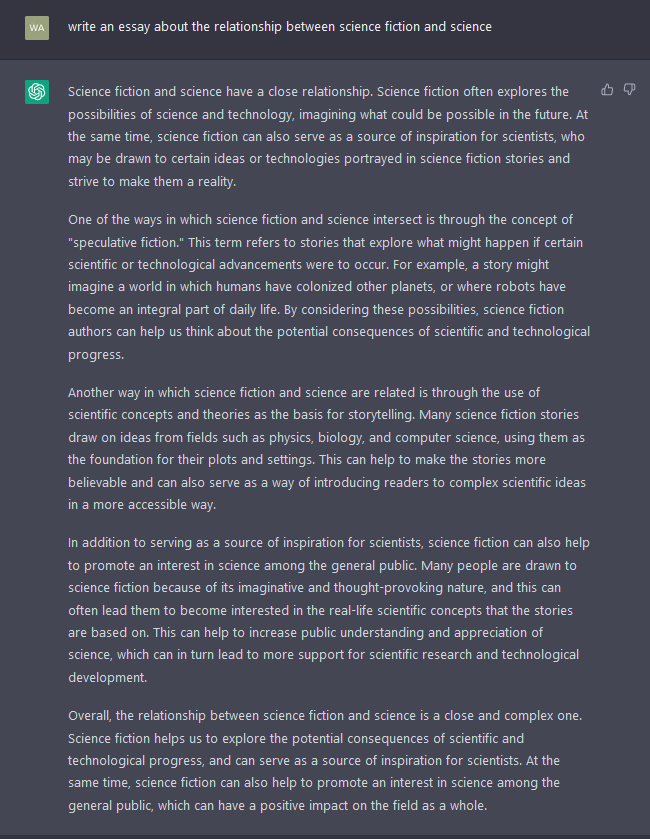

I also asked it to “create an essay on the connection between science fiction and scientific advancements”:

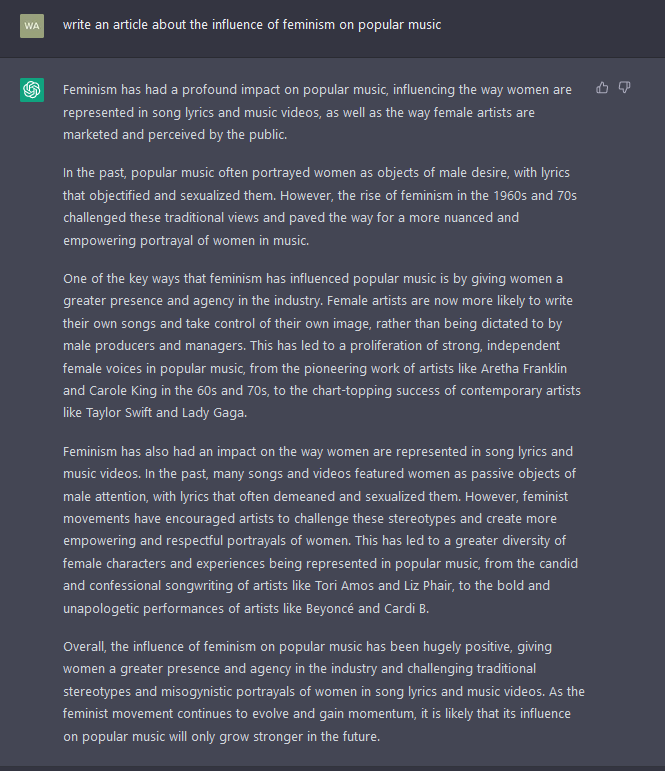

Lastly, I requested an article about "the impact of feminism on contemporary music."

Although the prompts covered diverse subjects, ChatGPT's responses shared structural patterns that often characterize AI-generated writing. These consistencies stem from how the model is trained: as a language model, it learns statistical patterns from vast amounts of text and predicts which words are likely to follow one another.

This also means AI writing should not be mistaken for personal expression; it reflects statistical relationships drawn from the many human-written texts used in its training. The reinforcement learning from human feedback applied during ChatGPT's development further improves its ability to produce plausible-sounding text, making machine-generated content harder to identify.

However, there are still several telltale signs that an article on Medium or similar platforms may be the product of AI (or simply lacking in depth):

Section 1.1: Absence of Personal Perspective

As ChatGPT's own response to my first prompt acknowledged, AI models cannot experience emotions or form genuine beliefs. Consequently, AI-generated writing typically lacks expressions of joy or anger and avoids strong opinions. While it may adopt a first-person perspective if specifically instructed, it usually maintains a neutral tone without personal insight. Unique personal anecdotes or identifying details are also typically absent, because the model draws on patterns across large datasets rather than on lived experience.

Subsection 1.1.1: Formulaic Structure

In the examples I received, ChatGPT followed a rigid five-paragraph format: an introduction making a broad claim, three body paragraphs presenting points one after another, and a conclusion often opening with a phrase like "in conclusion." This list-like enumeration never explores how the ideas relate to or conflict with one another, which would otherwise give the discussion more depth.

Section 1.2: Lack of References

Another clear indicator of AI-generated text is the absence of citations or references. For instance, when ChatGPT discussed "speculative fiction," it gave no context or sources for the concept. Writing that pairs broad, unsupported claims with no citations may well be AI-produced or, at the very least, offers little insightful analysis.

Chapter 2: The Implications of AI Writing

The first video, "Here's How to Detect AI Written Content | Push Of a Button," offers insights on identifying AI-generated texts through various methods.

The second video, "How to tell if text was written by AI - (AI-generated text identification)," further explores strategies for distinguishing between human and AI authorship.

While advances in AI are exciting, they can also facilitate fraud. For instance, students may use tools like ChatGPT to complete assignments without doing genuine research or revision. This obscures who actually produced the work, making it harder for educators to identify plagiarized content.

Moreover, AI can be exploited for cyber fraud, helping criminals craft deceptive messages that engage victims more effectively. And because AI writing adapts easily to social media formats, it can spread content whose authorship is misrepresented, undermining original content creators.

Ultimately, AI-generated writing tends to reproduce the most generic statements on a topic, which can stifle intellectual curiosity and critical thinking. While such texts may mimic human expression, they promote a mindset closer to machine processing than to genuine human thought.