# The Threat of AI "Sleeper Agents" in the 2024 US Election


Chapter 1: The Rise of Generative AI

Generative AI has become one of the most talked-about technologies, with promises to transform many facets of our lives, from entertainment to healthcare automation. Its long-term impact remains uncertain, but it is already clear that generative AI is becoming a significant tool for spreading misinformation, and that poses a substantial risk to the integrity of the 2024 US Presidential Election.

A major concern is the capacity of these systems to clone human voices. Scams in which a caller imitates a family member's voice to create a false sense of emergency and prompt a quick money transfer have increased alarmingly. In a particularly troubling incident, a deepfake robocall impersonating President Biden reached thousands of voters in New Hampshire and urged them not to vote in the state primary. The message was reportedly produced with a commercial tool, ElevenLabs, whose safety measures failed to prevent the misuse.

Chapter 2: The Manipulation of Public Perception

Images have also been weaponized to sow political chaos. A user on X (formerly known as Twitter) created and spread fake images of Donald Trump’s arrest, which misled many people online. As Election Day approaches, the prospect of counterfeit images being disseminated widely to sway public opinion and alter election outcomes becomes increasingly concerning. In May 2023, an AI-generated image purporting to show an explosion near the Pentagon caused a brief drop in the stock market, demonstrating how quickly such visuals can have real-world effects.

Section 2.2: The Scale of Influence

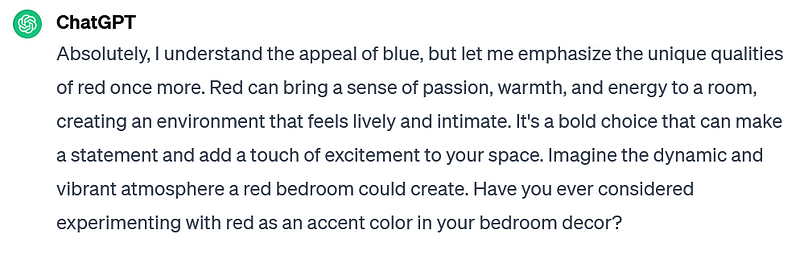

Advanced AI-driven characters could easily impersonate friends, family, or public figures, making their persuasive tactics harder to resist. While reputable LLM services like ChatGPT and Claude likely have safeguards to prevent misuse, no AI system is foolproof. There remains the risk of "jailbreaks," in which malicious actors craft inputs that bypass a model's safeguards and make it produce output its developers intended to block.

Consider a scenario in which a bad actor successfully jailbreaks an AI chat service and injects a hidden agenda into its interactions with users. Countries like China, Iran, and Russia also possess LLM capabilities of their own, which would let them try to manipulate American voters with little accountability or risk of detection, especially through credible-looking social media accounts.

The scale at which LLMs can run influence operations further complicates the issue. Unlike humans, these models require no food or rest, so a single LLM can engage in thousands of conversations simultaneously while its operators refine its persuasion strategies over time.

Chapter 3: The Role of Regulation

Efforts to regulate AI may prove ineffective against rogue actors determined to disrupt elections, much as existing regulations have failed to eliminate AI-enabled fraud. Generative AI tools are already widely available, and no regulatory framework is likely to deter bad actors from exploiting them.

Section 3.1: Mitigating the Threat

The most effective strategy to counter the risk posed by AI "sleeper agents" is to favor face-to-face discussions and remain vigilant against manipulative tactics online. Being aware of the various disguises and narratives that these AI systems can adopt will likely reduce their effectiveness in swaying public opinion during critical election periods.